As Moore’s Law Slows, Hedge Your Bets With Design Process Efficiency

Sep 17, 2015

Are you dreading the day when Moore’s Law comes to a grinding halt? I’m concerned, but I’m not as fatalistic as some.

Here’s why: There are plenty of ways to eke out more scalability in the semiconductor design process through greater efficiency.

SoC design realities make it imperative to re-evaluate mature semiconductor processes for greater efficiencies that yield lower costs, higher performance and shorter time to market. Because scaling to lower geometries won’t yield the same economic or technological benefits that have fueled the semiconductor industry in the past, it’s time to consider what else is possible to sustain innovation and growth.

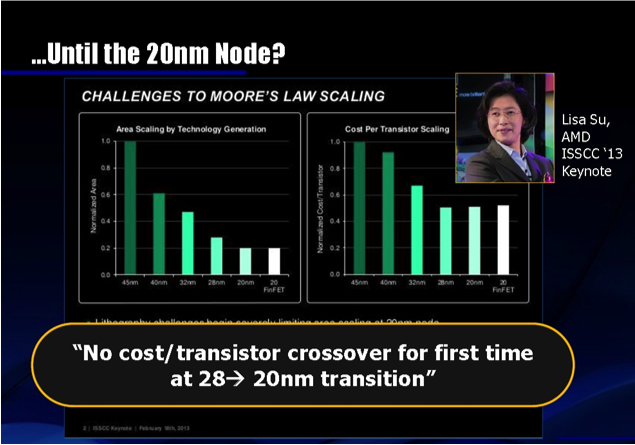

Have you heard? Moore’s Law is slowing down a little. The evidence is in the slide above, presented at a recent industry conference by Walden C. Rhines, Chairman and CEO of Mentor Graphics.

In other words, the industry needs to refocus its energies on optimizing the initial design of a chip, rather than betting on future process node shrinks to meet cost, performance and power goals.

Some teams have already adopted this approach. Below are some quantifiable benefits of an improved SoC design process:

- Productivity: Time is money in SoC design. Hardware and software teams consisting of 100 people cost about $2 million per month, or $24 million per year. If a design process is optimized in a way that cuts 30 to 35 days from delivery, that team can save about $2 million or more in engineering costs. A few advanced teams have found it beneficial to re-examine the way chips are designed so they can reduce schedule time, cut costs and get to market faster than the competition.

- Die Size Reduction: Even a design that is only 3 to 4 mm² smaller than the previous generation can save millions of dollars, considering that the average price for silicon real estate is approximately 10 cents per square millimeter in a 28-nanometer process. That is 30 to 40 cents saved on every die, which adds up quickly across a high-volume production run. Such reductions are currently providing a meaningful economic advantage over competitors, even for high-volume products. One of the areas seeing the greatest benefit is the interconnect, where reducing wires and logic gates cuts silicon area by as much as 50%.

- Lower Power: Cutting overall chip power consumption by as little as 1 milliwatt generates tremendous advantages without the need to upgrade to advanced geometries. This tiny amount of saved energy can greatly extend the time between battery charges in mobile devices, for example. The problem today is that most energy-saving measures are focused on the GPU and CPU. The rest of the SoC burns system idle power and contributes greatly to overall system power consumption. If energy-saving design measures are applied to the interconnect, which connects all the separate IP blocks on the chip, they can significantly reduce system idle power.

- Higher Bandwidth: On-chip links scaling beyond 1.6 GHz can improve performance within subsystems that run at 600 MHz. They can also improve quality-of-service (QoS), particularly for multimedia data flows.

- Design Starts: Instead of producing one SoC every 18 months, it’s now possible to deliver derivatives of one main design every 5 to 8 months. Struggles during the late stages of the cycle are preventing some companies from multiple design starts within a calendar year. If those struggles can be eliminated, deliveries are accelerated, enabling companies to deliver higher volume and serve multiple market segments. Through advanced interconnect IP implementation, some teams are eliminating routing congestion and place-and-route issues in order to get to market much sooner.
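The productivity and die-size figures in the list above are simple arithmetic, and a rough Python sketch makes the assumptions explicit. The function names, the 30-day month and the 10-million-unit production volume are illustrative assumptions, not figures from any particular design team; only the $2 million monthly team cost, the 30 to 35 days saved, the 3 to 4 mm² reduction and the 10 cents per mm² come from the discussion above.

```python
def schedule_savings(team_cost_per_month, days_saved, days_per_month=30):
    """Engineering cost avoided by shortening the schedule.

    Assumes cost accrues evenly over a 30-day month (an assumption).
    """
    return team_cost_per_month * days_saved / days_per_month


def die_area_savings(area_saved_mm2, cost_per_mm2, units_shipped):
    """Silicon cost avoided across a production run."""
    return area_saved_mm2 * cost_per_mm2 * units_shipped


if __name__ == "__main__":
    TEAM_COST_PER_MONTH = 2_000_000   # 100-person team, from the text
    COST_PER_MM2 = 0.10               # 28 nm estimate, from the text
    UNITS_SHIPPED = 10_000_000        # hypothetical volume, not from the text

    for days in (30, 35):
        saved = schedule_savings(TEAM_COST_PER_MONTH, days)
        print(f"{days} days saved: ${saved:,.0f} in engineering cost")

    for area in (3, 4):
        saved = die_area_savings(area, COST_PER_MM2, UNITS_SHIPPED)
        print(f"{area} mm2 saved: ${saved:,.0f} over {UNITS_SHIPPED:,} units")
```

Under these assumptions, both levers land in the same range of a few million dollars, which is why a small area reduction matters even for high-volume products. Changing `UNITS_SHIPPED` shows how strongly the die-size benefit depends on volume.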

The teams that cling to internal interconnect efforts are struggling to achieve these optimizations. They rely on legacy technology such as hierarchical buses and configurable crossbars. These efforts have not been able to keep pace with rising SoC complexity during the steady march toward 14nm.

Engineers who adopt advanced network-on-chip (NoC) IP in their designs are seeing these benefits. NoC interconnect fabric IP is one of the few areas left on the SoC that is configurable. Internal efforts are inevitably falling by the wayside because IP content is rising, and teams assigned to interconnect development cannot keep up with late-stage changes and rising complexity.

Designers looking to hedge against a Moore’s Law slowdown should consider the interconnect technology that delivers the highest quality of results. Lower product cost and hardware architecture flexibility should rate equally high on any priority list. NoC IP is best qualified for simultaneously improving quality of results and productivity.