SemiWiki: Arteris Frames Network-On-Chip Topologies in the Car

On Apr 10, 2024

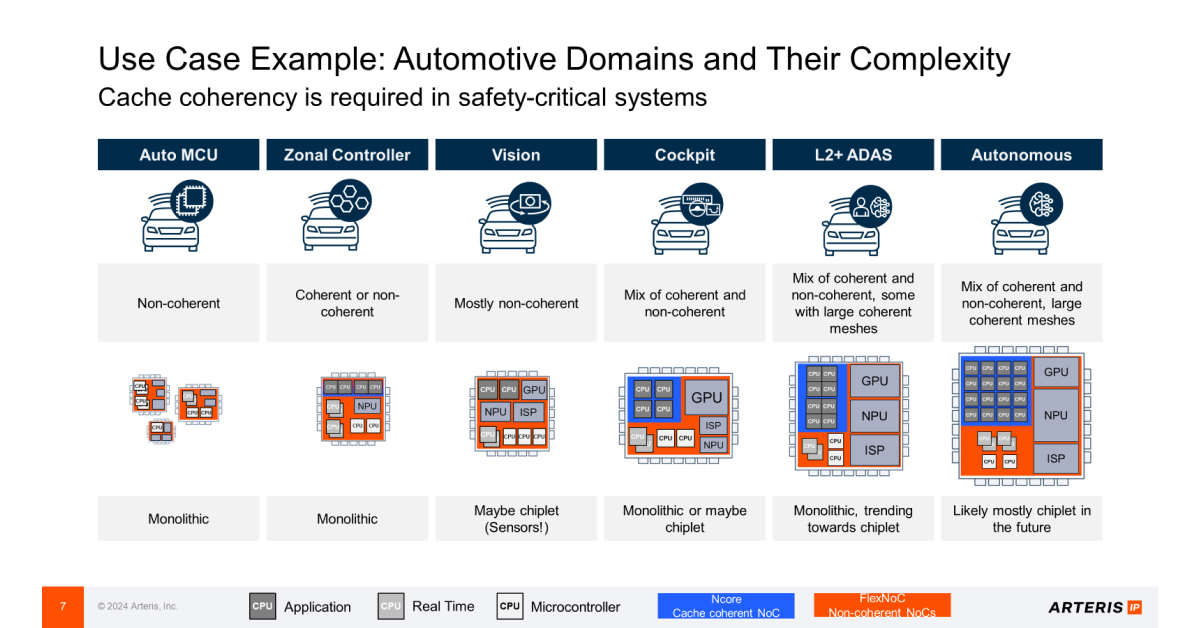

On the heels of Arm’s 2024 automotive update, Arteris and Arm announced an extension of their partnership. It now covers the latest AMBA 5 protocol for coherent operation (CHI-E), in addition to already supported options such as CHI-B, ACE and others. Two points are noteworthy. First, Arm’s new Automotive Enhanced (AE) cores upgraded protocol support from CHI-B to CHI-E, and Arm and Arteris have collaborated to validate the Arteris Ncore coherent NoC generator against the CHI-E standard. Second, Arteris has also done the work to certify Ncore-generated networks with the CHI-E protocol extension for ASIL B and ASIL D. (Ncore-generated networks are already certified for earlier protocols, as are FlexNoC-generated non-coherent NoC networks.) In short, Arteris coherent and non-coherent NoC generators are already aligned with the latest Arm AE releases and ASIL safety standards. That prompts the question: where are coherent and non-coherent NoCs required in automotive systems? Frank Schirrmeister (VP Solutions and Business Development at Arteris) helped clarify my understanding.

Automotive, datacenter/HPC system contrasts

Multi-purpose datacenters are highly optimized for task throughput per watt per dollar. CPU and GPU designs exploit highly homogeneous architectures for high levels of parallelism, connecting through coherent networks to maximize the advantages of that parallelism while ensuring that individual processors do not trip over each other on shared data. Data flows into and out of these systems through regular network connections, and power and safety are not primary concerns (though power has become more important).

Automotive system architectures are more diverse. Most of the data comes from sensors – drivetrain monitoring and control, cameras, radars, lidars, etc. – streaming live into one or more signal processing stages, commonly implemented in DSPs or (non-AI) GPUs. Processing stages for object recognition, fusion and classification follow. These stages may be implemented through NPUs, GPUs, DSPs or CPUs. Eventually, processed data flows into central decision-making, typically a big AI system that might equally be at home in a datacenter. These long chains of processing must be distributed carefully through the car architecture to meet critical safety goals, low power goals and, of course, cost goals. As an example, it might be too slow to ship a whole frame from a camera through a busy car network to the central AI system, and only then begin to recognize an imminent collision. In such cases, initial hazard detection might happen closer to the camera, reducing what the subsystem must send to the central controller to a much smaller packet of data.
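To put rough numbers on the camera example, here is a back-of-envelope sketch in Python. Every figure in it is an assumption chosen for illustration, not something from Arteris or Arm: an uncompressed 1080p frame at 2 bytes per pixel, a 64-byte hazard message, a 1 Gb/s in-car link, and half of that link already consumed by other traffic. The helper `transfer_time_ms` is likewise hypothetical, and it ignores protocol overhead, arbitration and queuing delays.

```python
def transfer_time_ms(payload_bytes: int, link_mbps: float, utilization: float = 0.0) -> float:
    """Milliseconds needed to push payload_bytes over a shared link.

    link_mbps is the raw link rate in megabits per second; utilization is
    the fraction of that rate already taken by other traffic (0.0 to <1.0).
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0.0, 1.0)")
    available_bits_per_s = link_mbps * 1e6 * (1.0 - utilization)
    return payload_bytes * 8 / available_bits_per_s * 1e3


# Assumed, illustrative figures (not taken from the article).
FRAME_BYTES = 1920 * 1080 * 2   # uncompressed 1080p frame, 2 bytes per pixel
HAZARD_PACKET_BYTES = 64        # small "hazard detected" message
LINK_MBPS = 1000.0              # 1 Gb/s in-car network link
UTILIZATION = 0.5               # half the link is busy with other traffic

frame_ms = transfer_time_ms(FRAME_BYTES, LINK_MBPS, UTILIZATION)
packet_ms = transfer_time_ms(HAZARD_PACKET_BYTES, LINK_MBPS, UTILIZATION)

print(f"whole frame:   {frame_ms:.3f} ms")
print(f"hazard packet: {packet_ms:.6f} ms")
print(f"ratio:         {frame_ms / packet_ms:.0f}x")
```

Under these assumptions the whole frame takes about 66 ms on the wire before the central system can even start on it, while the hazard packet takes about a microsecond. Real links, frame formats and compression will change the absolute numbers, but the gap of several orders of magnitude is the argument for doing first-pass detection near the sensor.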

Key consequences of these requirements are that AI functions are distributed as subsystems through the car system architecture and that each subsystem is composed of a heterogeneous mix of functions: CPUs, DSPs, NPUs and GPUs, among others.